Al from Oasis Global Partners recently approached me to deploy the Pimcore stack to Alibaba Cloud. Truth be told, this was my first time using this cloud provider, and I had no experience with it to draw on. Fortunately, Alibaba Cloud is very similar to AWS, so my prior experience there was helpful. This article offers some tips for deploying Pimcore to Alibaba Cloud using Alibaba’s managed Kubernetes service, and shows how I enabled a continuous deployment (CD) workflow in this environment. The goal is to help web developers become more familiar with hosting on Alibaba using modern web development practices. It assumes you have some Docker and PHP experience.

I started with the official Docker image published by Pimcore on GitHub.

Alibaba Services

Below, I discuss each cloud service I used and how I configured it, one section per service. For quick reference, I used:

- Container Registry - Storing images

- ApsaraDB for RDS - Managed Database Service

- ApsaraDB for Redis - Our cache service

- SSL Certificates - For managing SSL Certs (Never knew about the trouble of ICP verification before!)

- NAS File System - Used for persistent storage of files

- Serverless Kubernetes - The puppet master for running the application

Container Registry

I started with Alibaba’s Container Registry, a service for storing Docker images. The first step was to connect the source control system that images are built from; in this case, GitHub. I then defined build rules that map specific Git branches to specific Docker tags, so that each build is tagged predictably. Below is the naming convention I used for these associations.

| Git Branch | Docker Tag |
| ---------- | ---------- |
| master     | latest     |
| developer  | dev        |
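These build rules amount to a small lookup from branch to tag. As a minimal sketch, the Python below models that mapping and composes a full image reference from it. The registry host pattern (`registry-intl.<region>.aliyuncs.com`), the region, the namespace and the repository name are assumptions for illustration; copy the exact endpoint shown in your own Container Registry console.

```python
# Branch -> Docker tag rules from the table above.
BUILD_RULES = {
    "master": "latest",
    "developer": "dev",
}


def tag_for_branch(branch):
    """Return the Docker tag a push to `branch` produces, or None if no rule matches."""
    return BUILD_RULES.get(branch)


def image_reference(region, namespace, repository, branch):
    """Compose a full image reference for the image built from `branch`.

    The registry host pattern is an assumption; use the endpoint
    shown in your Container Registry console.
    """
    tag = tag_for_branch(branch)
    if tag is None:
        raise ValueError(f"no build rule for branch {branch!r}")
    host = f"registry-intl.{region}.aliyuncs.com"
    return f"{host}/{namespace}/{repository}:{tag}"


if __name__ == "__main__":
    # Hypothetical region, namespace and repository names.
    print(image_reference("ap-southeast-1", "oasis", "pimcore", "master"))
    # prints registry-intl.ap-southeast-1.aliyuncs.com/oasis/pimcore:latest
```

Keeping the rules in one table makes it obvious that only two branches ever produce deployable tags; pushes to any other branch simply have no matching rule.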

There were a few “gotchas” I had to overcome to get the workflow running smoothly.

Docker setup

First of all, I needed to ensure that the Dockerfile was in the root of the repository.

When the Dockerfile was nested in a subdirectory, I could point the build at that location, but the build context then became that directory, so every path in ADD/COPY commands had to be relative to the Dockerfile’s location. This may have been caused by a missing parameter in the build configuration, but I'm not certain. It may be fixed in the future; for now, the easiest way to get builds running is to put the Dockerfile in the root of the repository and make all ADD/COPY paths relative to it.

Build Performance issues

Build performance turned out to be all over the map.

In these images, the fourth column is the build time in seconds. As we can see, build times range from 18 to 1749 seconds. It’s worth noting that the 18-second build isn’t really representative, since it occurred when a build was promoted from “dev” to “latest”.

This promotion behavior is actually useful. We can keep building from the developer branch until we’re happy with the results. When that commit is merged into master, it is the same commit, so the container registry essentially treats it as a copy of the dev image and retags it as latest, skipping the long build. Our full builds ranged from 444 seconds (about 7 minutes) to 1749 seconds (about 29 minutes). Build times fluctuate considerably and don’t appear to correlate with the contents of the Dockerfile; they seem to depend on whatever resources Alibaba Cloud has available at build time. Occasionally builds even time out, as with the one shown that ran for over 4000 seconds.

Configuring the Settings

For these builds, I turned on the “automatically build images when code changes” property. I also made sure to use build servers deployed outside Mainland China, which gave better performance:

It’s also important to set up triggers once the cluster is installed. A trigger fires when a container image finishes building; later in this article, these triggers are used to redeploy the cluster.

ApsaraDB for RDS

For the MySQL server, I used ApsaraDB for RDS with the following settings for Pimcore:

- Database Engine: MySQL 5.7

- Edition: High Availability (Alibaba Cloud claims this covers more than 80% of business usage)

- Storage Type: Local SSD

- Instance Type: rds.mysql.s1.small – 1 Core, 2GB RAM, 300 Max Connections

Make sure your subscription is set to auto-renew; otherwise it’s easy to lose the instance by accident. Alibaba sends regular email reminders, but they can still slip through the cracks.

Whitelist

The whitelist works much like an AWS security group: add the IP address (or address range) of every service that needs to access the database.
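Conceptually, the whitelist is a list of addresses and CIDR ranges that a client IP must fall within. The sketch below models that rule with Python’s standard `ipaddress` module. It is only an illustration of the check (the entries are hypothetical), not a way to query or configure the RDS whitelist itself.

```python
import ipaddress

# Hypothetical whitelist entries: a single host and a private VPC range.
WHITELIST = ["203.0.113.25", "172.16.0.0/12"]


def is_allowed(client_ip, whitelist=WHITELIST):
    """Return True if client_ip is covered by any entry in the whitelist."""
    address = ipaddress.ip_address(client_ip)
    # A bare IP parses as a /32 network, so one code path handles both forms.
    return any(address in ipaddress.ip_network(entry) for entry in whitelist)


if __name__ == "__main__":
    print(is_allowed("172.20.3.4"))  # True: inside 172.16.0.0/12
    print(is_allowed("8.8.8.8"))     # False: not whitelisted
```

Whitelisting the VPC range rather than individual container IPs avoids editing the list every time a pod is rescheduled onto a new address.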

InnoDB Large Prefix

Pimcore requires the `innodb_large_prefix` parameter to be ON. You can change it under the instance’s Editable Parameters.

Finally, be sure to use utf8mb4 when creating the database, so that it is compatible with Pimcore.
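These two settings go together because of byte arithmetic: utf8mb4 uses up to 4 bytes per character, and without large prefixes InnoDB caps an index key prefix at 767 bytes (3072 bytes with `innodb_large_prefix` enabled and the DYNAMIC or COMPRESSED row format). The short calculation below shows why an indexed VARCHAR(255) column, a common length chosen here as an assumption rather than a specific Pimcore table, only fits under the larger limit.

```python
# Worst-case bytes per character for MySQL's utf8mb4 character set.
UTF8MB4_MAX_BYTES = 4

# InnoDB index key prefix limits, in bytes.
COMPACT_LIMIT = 767        # REDUNDANT/COMPACT row formats, no large prefix
LARGE_PREFIX_LIMIT = 3072  # innodb_large_prefix=ON with DYNAMIC/COMPRESSED rows


def index_bytes(varchar_length, bytes_per_char=UTF8MB4_MAX_BYTES):
    """Worst-case size of an index key over a VARCHAR(varchar_length) column."""
    return varchar_length * bytes_per_char


def max_indexable_chars(limit, bytes_per_char=UTF8MB4_MAX_BYTES):
    """Longest VARCHAR that can be fully indexed under a given byte limit."""
    return limit // bytes_per_char


if __name__ == "__main__":
    needed = index_bytes(255)
    print(needed)                              # 1020
    print(needed <= COMPACT_LIMIT)             # False
    print(needed <= LARGE_PREFIX_LIMIT)        # True
    print(max_indexable_chars(COMPACT_LIMIT))  # 191
```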

ApsaraDB for Redis

I used ApsaraDB for Redis as Pimcore’s caching service. Set it up in the same zone as all the other services, and configure its whitelist the same way as the database’s so that the application can reach it.

The following settings worked fine in a low traffic setup:

- Edition: Community

- Version: Redis 5.0

- Architecture Type: Standard

- Instance Class: 1G (note: this goes a long way with a cache!)

You may need to tweak the parameters to better suit your environment.

SSL Certificates

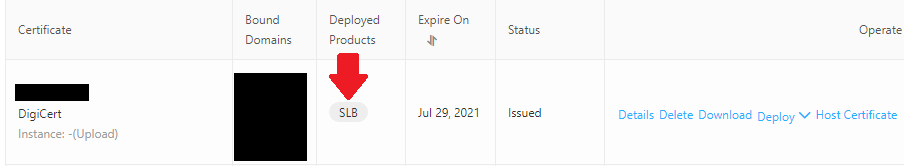

While you can purchase SSL certificates through Alibaba, they can be expensive, so it can make sense to buy one elsewhere and upload it to Alibaba. When setting up the cluster, the Server Load Balancer (SLB) needs access to the certificate, so the first step is to deploy the certificate to SLB in the same region as the application, as shown below:

Very important: keep a record of the certificate ID; you will need it later when setting up the SLB in the cluster. Note that this is not the same ID that appears in the details pane, as shown below:

To reiterate, the ID shown there is not the one we need. To get the correct certificate ID, we need the Alibaba Cloud command-line (CLI) tools. You can install them directly on your machine or put them in a Docker container; I find the container the cleaner approach.

Use the following code snippet to create a Dockerfile with the tools installed:

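```dockerfile
FROM alpine:latest

# Add the jq tool for formatting and querying the CLI's JSON output.
RUN apk add --no-cache jq

# Download and install the Alibaba Cloud CLI in a single layer,
# so the downloaded archive does not remain in the image.
RUN wget https://aliyuncli.alicdn.com/aliyun-cli-linux-3.0.2-amd64.tgz \
    && tar -xvzf aliyun-cli-linux-3.0.2-amd64.tgz \
    && rm aliyun-cli-linux-3.0.2-amd64.tgz \
    && mv aliyun /usr/local/bin/

# The container has no long-running process of its own, so keep it alive
# with a command that never exits; we exec into it to use the CLI.
CMD ["tail", "-F", "anything"]
```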

Because this container has no long-running process of its own, we have to give it a “stay running” command so that we can log into it and use the CLI tools. I used `tail -F anything`, which keeps retrying a file that never appears and so never exits.

Once the container is built and running, you can access it with `docker exec -it oasis-abc-cli sh`.

Before you can use the CLI tools, you need to create a user in Resource Access Management (RAM) in the cloud console and obtain access keys for it.

Use the Create AccessKey button to generate a new set of keys, and store the output securely so you can refer to it later: the keys appear only once in the portal.

Once connected to the container, run `aliyun configure` to set up credentials for the tools.

It asks for the AccessKey ID, AccessKey Secret and Region ID (region IDs are listed here: https://www.alibabacloud.com/help/doc-detail/40654.htm).

If the configuration succeeds, you should see the following output in the terminal:

After this is set up, you can run `aliyun slb DescribeServerCertificates`.

This returns output similar to the following (I ran the raw response through a JSON formatter to tidy it up):

Note that the ID you need when setting up the SLB for your cluster is the value under `ServerCertificateId` in the output above.
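If you would rather not read through the raw response by hand, a few lines of Python can pull out the IDs. This sketch assumes the response shape described in the SLB API reference, a `ServerCertificates.ServerCertificate` list whose entries carry `ServerCertificateId` and `ServerCertificateName`; check it against your own output. The sample response and its ID value are invented for illustration.

```python
import json

# Invented sample, shaped like a DescribeServerCertificates response (assumption).
SAMPLE_RESPONSE = """
{
  "RequestId": "00000000-0000-0000-0000-000000000000",
  "ServerCertificates": {
    "ServerCertificate": [
      {
        "ServerCertificateId": "1234567890123456_example_id",
        "ServerCertificateName": "www-example-com",
        "RegionId": "ap-southeast-1"
      }
    ]
  }
}
"""


def certificate_ids(raw_json):
    """Map each certificate's name to its ServerCertificateId."""
    data = json.loads(raw_json)
    entries = data.get("ServerCertificates", {}).get("ServerCertificate", [])
    return {entry.get("ServerCertificateName"): entry["ServerCertificateId"]
            for entry in entries}


if __name__ == "__main__":
    # In practice, pass in the text printed by the CLI command instead.
    for name, cert_id in certificate_ids(SAMPLE_RESPONSE).items():
        print(name, cert_id)
```

Keying the result by certificate name helps when several uploaded certificates share a region and you need to pick the right one.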

NAS File System

I used the Alibaba NAS file system for the persistent disk. This is where any assets or other important files for Pimcore are stored. One advantage is that it provides a true NFS file system that several containers can mount at once, which makes clustered deployments possible. In effect, this is our Docker volume.

There are a few important items I need to stress to ensure that it works properly.

First, make sure you create the file system in the same region as all of the other services. Second, as with the other services, it’s a very good idea to set your subscription to auto-renew so that you don’t accidentally lose the service. I used the following settings:

- Storage Type: Capacity Type (this seemed adequate for our performance needs)

- Protocol: NFS

- Capacity: Start at 100GB

Next, add a mount target: the network endpoint through which containers reach the file system. One file system can be shared across many applications, each mounting its own path on it. In my case, I created a root-level mount point and treated the disk much as I would a physical disk.
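In plain Kubernetes terms, mounting the NAS at its root path corresponds to an `nfs` volume plus a `volumeMount` in the pod spec. The Python sketch below builds that fragment as plain data and prints it as JSON. The mount target domain, volume name and container path are hypothetical placeholders, and whether a Serverless Kubernetes cluster accepts NAS mounts in exactly this form is an assumption to verify; here the mount itself was configured through the console.

```python
import json


def nas_volume_fragment(mount_target, nas_path="/", mount_path="/mnt/nas",
                        volume_name="pimcore-nas"):
    """Build the pod-spec pieces that mount a NAS path over NFS.

    Returns (volume, volume_mount): the volume belongs under spec.volumes,
    the mount under the container's volumeMounts.
    """
    volume = {
        "name": volume_name,
        # Standard Kubernetes NFS volume source: server host plus exported path.
        "nfs": {"server": mount_target, "path": nas_path},
    }
    volume_mount = {"name": volume_name, "mountPath": mount_path}
    return volume, volume_mount


if __name__ == "__main__":
    # Hypothetical mount target domain; copy the real one from the NAS console.
    volume, mount = nas_volume_fragment("xxxx-yyyy.ap-southeast-1.nas.aliyuncs.com")
    print(json.dumps({"volumes": [volume], "volumeMounts": [mount]}, indent=2))
```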

Serverless Container Service

The Serverless Container Service is the brains of the entire application: it creates the other Alibaba Cloud services it needs. You can monitor those services on their own pages in the Alibaba portal, but you should manage them only through the cluster. This “feature” can cause problems, and it has bitten me a few times. Changes made directly on an individual service persist temporarily, but the cluster is essentially the puppet master for the system and will always overwrite them eventually.
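This overwrite behavior follows the usual Kubernetes reconciliation pattern: the cluster keeps converging the actual state back to the declared state. The toy Python model below is an illustration only, not Alibaba’s controller code, and its keys and values are hypothetical. It shows why, in this model, a bandwidth change made directly on a load balancer reverts, while properties the cluster does not declare are left alone.

```python
def reconcile(desired, actual):
    """Return the state a controller would converge `actual` to.

    Every key the cluster declares is reset to its declared value;
    keys it does not declare are carried over unchanged.
    """
    converged = dict(actual)
    converged.update(desired)  # manual edits to declared keys are undone
    return converged


if __name__ == "__main__":
    desired = {"bandwidth_mbps": 10, "cert_id": "from-annotations"}
    # Someone raised the bandwidth by hand and added a tag in the console.
    actual = {"bandwidth_mbps": 50, "cert_id": "from-annotations", "tags": "manual"}
    print(reconcile(desired, actual))
    # prints {'bandwidth_mbps': 10, 'cert_id': 'from-annotations', 'tags': 'manual'}
```

The practical takeaway is the one above: change the declared state (for SLBs, the service annotations), not the generated resource.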

A few helpful terms in both Kubernetes and Alibaba vernacular:

- Pods - Elastic Container Instances

- Services - Server Load Balancers

To open a shell in a running container, look it up in the Elastic Container Instance section of the console and use the web UI.

Configuring the Container

Under Container Configuration, we can set which environment variables are passed into the container. This is also where we will set up the NAS network mount points, like so:

Here are the environment variables I use in a typical Dockerized Pimcore app:

- ENABLE_PERSIST: Boolean; when true, maps in the persistent NFS drive using symlinks on container boot

- PIMCORE_ENVIRONMENT: The environment name

- DatabaseIP: Hostname or IP of the RDS/DB server

- DatabasePort: Port of the DB server

- DatabaseName: Name of the database on the DB server

- DatabaseUser: Database user name

- DatabasePassword: Password for that user

- RedisIP: Hostname or IP of the Redis server

- RedisDatabase: Number of the database used on the Redis server

- DEBUG_MODE: Controls whether Pimcore runs in debug mode

- DatabaseVersion: The MySQL server version in use

I set all of these as container environment variables, then populated the Symfony config from them using Symfony’s environment variable syntax (for example, `%env(DatabaseIP)%`).
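Pimcore itself reads these values through the Symfony config, but the variable contract is easy to sanity-check outside it. As a minimal sketch (in Python rather than PHP, and not part of Pimcore), the loader below reads the variables listed above from a mapping such as `os.environ`, fails fast if a required one is missing, and interprets the two boolean flags. Which variables count as required, and which strings count as true, are assumptions.

```python
import os

# Variables this sketch treats as mandatory (an assumption; adjust to your app).
REQUIRED = ["PIMCORE_ENVIRONMENT", "DatabaseIP", "DatabasePort", "DatabaseName",
            "DatabaseUser", "DatabasePassword", "RedisIP", "RedisDatabase"]

TRUTHY = {"1", "true", "yes", "on"}


def env_flag(env, name, default=False):
    """Read a boolean variable such as ENABLE_PERSIST or DEBUG_MODE."""
    value = env.get(name)
    return default if value is None else value.strip().lower() in TRUTHY


def load_settings(env=os.environ):
    """Collect the container's settings, failing fast on missing variables."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {
        "environment": env["PIMCORE_ENVIRONMENT"],
        "db_host": env["DatabaseIP"],
        "db_port": int(env["DatabasePort"]),
        "db_name": env["DatabaseName"],
        "db_user": env["DatabaseUser"],
        "db_password": env["DatabasePassword"],
        "db_version": env.get("DatabaseVersion", ""),
        "redis_host": env["RedisIP"],
        "redis_db": int(env["RedisDatabase"]),
        "persist": env_flag(env, "ENABLE_PERSIST"),
        "debug": env_flag(env, "DEBUG_MODE"),
    }


if __name__ == "__main__":
    settings = load_settings()
    # Never print the password; show everything else for a quick check.
    print({key: value for key, value in settings.items() if key != "db_password"})
```

Failing at boot with a list of missing names is usually easier to debug from the Elastic Container Instance logs than a database connection error deep inside the application.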

Pulling the Docker Image

To configure our containers, we will need to pull our image from the registry we set up earlier, like so:

Redeployment Triggers

This is the heart of the continuous deployment. We need to create a redeployment trigger so we can hook into the container registry and have the registry tell the cluster when a new image is ready.

Back on the container registry side, we take this link address and add it as a trigger, like so:

Server Load Balancer

As mentioned earlier, the certificate ID gave me some trouble, and so did bandwidth: changes I made by modifying the SLB directly did not stick, because the cluster overwrote them. To make these settings stick, set them through SLB annotations on the Kubernetes service. Make sure to use the certificate ID you obtained from the CLI tools earlier!
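To illustrate what such annotations look like, the Python sketch below builds a LoadBalancer Service manifest and prints it as JSON, which `kubectl apply -f` also accepts. The annotation keys are the ones I understand Alibaba Cloud’s controller to read for the certificate ID, listener ports, billing type and bandwidth, but treat them as assumptions and confirm them against the ACK documentation for your cluster version. The service name, selector, certificate ID and bandwidth value are placeholders.

```python
import json

# Assumed annotation prefix used by Alibaba Cloud's SLB integration.
ANNOTATION_PREFIX = "service.beta.kubernetes.io/alibaba-cloud-loadbalancer-"


def slb_service(name, app_label, cert_id, bandwidth_mbps):
    """Build a LoadBalancer Service whose SLB settings live in annotations.

    TLS terminates at the SLB; both listeners forward plain HTTP to
    port 80 in the pod. Annotation values must be strings.
    """
    annotations = {
        ANNOTATION_PREFIX + "cert-id": cert_id,
        ANNOTATION_PREFIX + "protocol-port": "https:443,http:80",
        ANNOTATION_PREFIX + "charge-type": "paybybandwidth",
        ANNOTATION_PREFIX + "bandwidth": str(bandwidth_mbps),
    }
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "annotations": annotations},
        "spec": {
            "type": "LoadBalancer",
            "selector": {"app": app_label},
            "ports": [
                {"name": "https", "port": 443, "targetPort": 80, "protocol": "TCP"},
                {"name": "http", "port": 80, "targetPort": 80, "protocol": "TCP"},
            ],
        },
    }


if __name__ == "__main__":
    # Placeholders: use your own names and the ServerCertificateId from the CLI.
    manifest = slb_service("pimcore", "pimcore", "YOUR_SERVER_CERTIFICATE_ID", 10)
    print(json.dumps(manifest, indent=2))
```

Because the settings live in the manifest, they are part of the declared state the cluster reconciles toward, so they survive the cluster overwriting the SLB.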

Closing Notes

Throughout the entire process, there are several tips to keep in mind:

- Remember to set up all services in the same region / zone for optimal performance.

- Many services will “expire” if they aren't set to auto-renew. Check the renewal settings when provisioning each service to prevent a headache down the road.

- In many ways, Alibaba Cloud feels like a replica of AWS. Try to draw on any AWS experience you have when you get stuck.